Designing Scalable and Loosely Coupled Architectures on AWS: A Complete Guide for SAA-C03

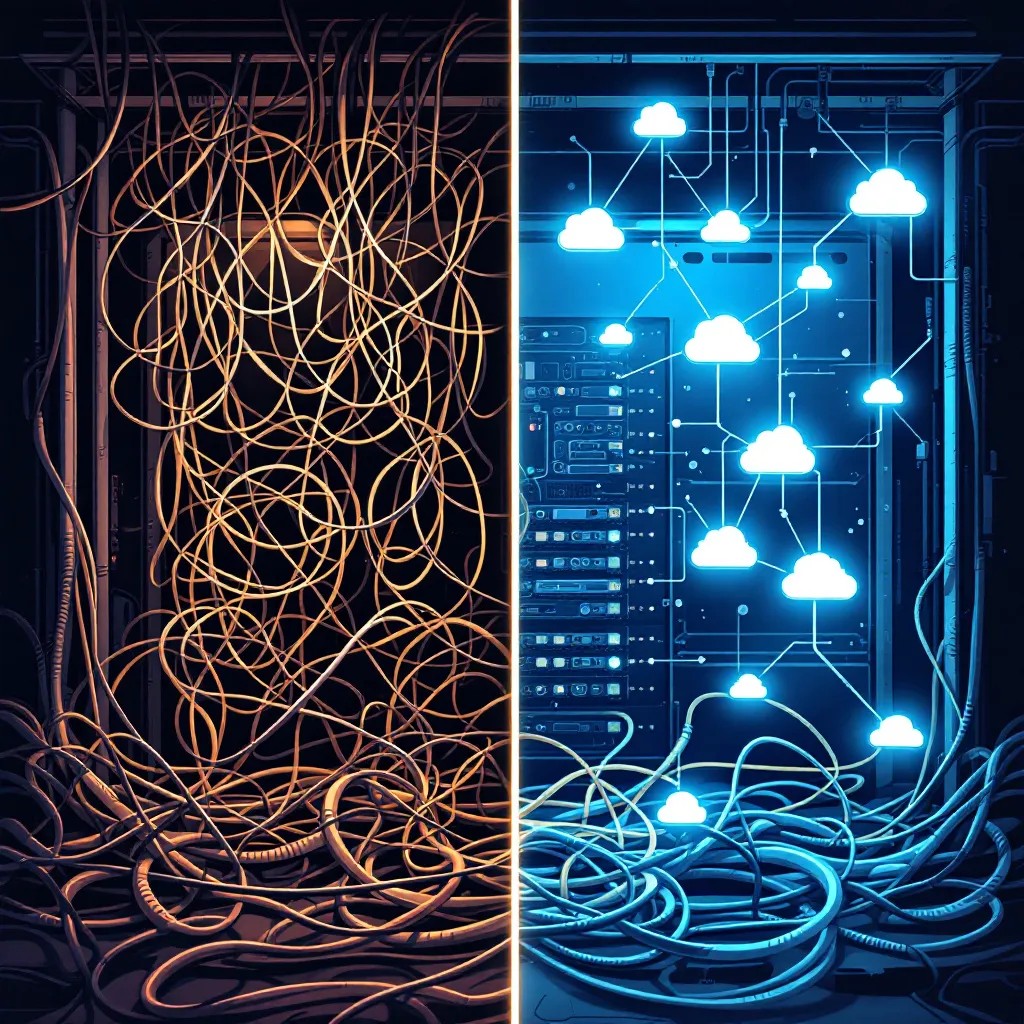

Ever tried to untangle the knot of cables under your desk? That’s exactly what a tightly coupled architecture feels like, except you’re not just tripping over it, you’re betting your business on it. After a decade and a half in cloud (and more Well-Architected reviews than I can count), I’ve watched team after team hit the same wall: systems so tangled that one small hiccup sends the whole thing sideways. It doesn’t have to be a nightmare. Whether you’re up late cramming for the AWS SAA-C03 exam or knee-deep in planning your next big launch, three properties are absolute must-haves: scalability, loose coupling, and high availability (staying up even when the universe conspires against you). Along the way I’ll sprinkle in a few war stories, walk through sketches and practical setups, point out where things tend to blow up, and share the exam nuggets that help you nail the test and build systems that hang in there when life gets unpredictable.

So let’s dive in and really get our hands dirty with what matters most: scalability, loose coupling, and high availability—the AWS way.

Picture this: a retail website, all bundled up in one massive Java application, humming away on a single lonely EC2 box. Black Friday hits and—predictably—the site collapses under load. Why? Tight coupling and zero scalability. This isn’t rare. Getting your brain around how to really build scalable, decoupled, and tough-as-nails architectures on AWS? That’s not just exam cramming—this is absolutely make-or-break if you’re serious about putting together real-world, modern, cloud-native systems.

- Scalability: Can your app handle a surge in users? Horizontal scaling (adding more compute, e.g., EC2s, Lambdas) beats vertical scaling (bigger instances) on AWS for cost, reliability, and automation. Scaling policies are set using metrics like CPU, request count, or queue depth.

- Loose Coupling: Are components communicating via well-defined interfaces (APIs, queues, events), or are they tightly bound? When you build things to be loosely coupled, each part can do its own thing—scale up, take a break, or even get a makeover—without dragging the whole system down with it. One piece changes? The others barely bat an eye. No chain reactions, no 3am fire drills because one little update just torpedoed the whole app. It’s just so much easier to change stuff safely.

- High Availability & Fault Tolerance: What if an instance, AZ, or region fails? High availability means the system stays up with minimal downtime (a brief failover is acceptable); fault tolerance goes further, meaning the system keeps operating without interruption when a component fails. AWS enables both through Multi-AZ deployments, Multi-Region patterns, failover strategies, and robust automation.

Priya’s Principle: “If any one piece of your system can take the whole thing down, you haven’t architected for the cloud—you’ve just moved your legacy pain to someone else’s data center.”

Alright—let’s actually see the difference between a system where everything clings together for dear life, vs. one where each piece can breathe on its own:

Here’s the classic ‘oh no’ setup, where every part is glued to the next, so if one slips, they all tumble:

```
+--------+    +--------+    +--------+
| Front  |<-->| Logic  |<-->|   DB   |
|  End   |    | Layer  |    |        |
+--------+    +--------+    +--------+
```

All components depend directly on each other. Check this out instead: every piece just does its thing, drops what it’s finished into a queue, and couldn’t care less if the next guy down the line takes a coffee break.

```
+--------+    +--------+    +----------+
| Front  |--->| Queue  |--->|  Worker  |
|  End   |    | (SQS)  |    | (Lambda) |
+--------+    +--------+    +----------+
```

If any one part stumbles (maybe your worker falls over or just gets super slow), messages simply wait in the queue, and the rest of the app keeps chugging along like nothing happened.
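To make the queue idea concrete, here’s a minimal local sketch in plain Python. It assumes the standard library’s queue.Queue as an in-memory stand-in for SQS (not durable, not distributed), and the function names are hypothetical. The point is behavioral: the front end keeps accepting work while the worker is down, and the backlog drains once it recovers.

```python
import queue

# In-memory stand-in for an SQS queue (illustration only: not durable)
orders = queue.Queue()


def front_end_submit(order_id: str) -> str:
    """Producer: enqueue and return immediately; never calls the worker directly."""
    orders.put({"orderId": order_id})
    return "accepted"


def worker_drain(processed: list) -> None:
    """Consumer: process everything currently waiting in the queue."""
    while True:
        try:
            msg = orders.get_nowait()
        except queue.Empty:
            return
        processed.append(msg["orderId"])
        orders.task_done()


# The worker is "down": the front end still accepts every order
statuses = [front_end_submit(f"order-{i}") for i in range(3)]
print(statuses, "backlog:", orders.qsize())  # ['accepted', 'accepted', 'accepted'] backlog: 3

# The worker comes back and drains the backlog
done = []
worker_drain(done)
print(done, "backlog:", orders.qsize())  # ['order-0', 'order-1', 'order-2'] backlog: 0
```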

So, what AWS tools do you reach for to actually pull off these decoupled, scalable designs?

AWS is basically a playground stacked with everything you need for breaking your app into manageable, scale-friendly pieces—and making sure it all keeps rolling when something goes sideways. Here’s a summary table of the most exam-relevant ones, followed by practical highlights.

| Service | Scaling | Coupling | HA/DR | Best Use |
|---|---|---|---|---|
| EC2 + ASG | Horizontal (ASG) | Stateful/Stateless | Multi-AZ, manual Multi-Region | Custom compute, legacy apps |
| Lambda | Auto, serverless | Stateless | Multi-AZ | Event/microservice/automation |
| ECS/EKS | Service auto scaling | Microservices, containers | Multi-AZ, blue/green | Container orchestration |
| S3 | Virtually unlimited | Loose (REST/API) | Multi-AZ by default, Cross-Region Replication for DR | Object storage, backups |
| DynamoDB | Auto, on-demand | Loose (HTTPS API) | Multi-AZ, Global Tables | NoSQL, high-performance data |
| RDS/Aurora | Read replicas, Aurora Serverless | Tighter (SQL connections) | Multi-AZ, Aurora Global Database | Relational data |
| SQS/SNS | Auto | Loose (async) | Multi-AZ | Decoupling, events |
| EventBridge | Auto | Loose (events) | Multi-AZ | Event-driven microservices |
| API Gateway | Auto, throttling | REST, HTTP, WebSocket | Multi-AZ | API endpoints, auth |

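- EC2 & Auto Scaling Groups (ASG): Core compute. Use ASGs for dynamic horizontal scaling, lifecycle hooks to run custom actions (bootstrapping, connection draining) as instances launch or terminate, instance refresh for rolling updates, and mixed instances policies to combine On-Demand and Spot for cost.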

- Lambda: Serverless, scales automatically, and integrates natively with SQS, SNS, and EventBridge. Use provisioned concurrency to keep pre-initialized environments ready for latency-sensitive functions, and reserved concurrency to guarantee (and cap) capacity for a critical function. Account-level concurrency limits apply, so plan accordingly!

- ECS/EKS: For containers: ECS (Fargate or EC2 launch types) and EKS (managed Kubernetes). Both come loaded with the good stuff: service discovery (AWS Cloud Map for ECS, Kubernetes DNS for EKS), load balancing through ALB or NLB, and service auto scaling, so once it’s configured you can actually go get coffee instead of watching dashboards. ECS also plays nicely with SQS, so background and asynchronous work is covered.

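- S3: Virtually unlimited object storage. New objects are encrypted with SSE-S3 by default; switch a bucket’s default to SSE-KMS if you need key-level control and auditing. Configure lifecycle policies for cost control, and set up cross-region replication for DR.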

- DynamoDB: Serverless NoSQL. Use on-demand capacity for unpredictable spikes and global tables for multi-region. Partition key design is critical; avoid “hot” partitions. Streams provide change data capture (CDC) with a configurable view type: keys only, new image, old image, or both new and old images.

- RDS/Aurora: Managed relational DBs. RDS supports Multi-AZ failover and read replicas; Aurora adds self-healing storage (six copies across three AZs), Aurora Serverless (use v2, which supports Multi-AZ deployments), and Aurora Global Database (available for both Aurora MySQL and Aurora PostgreSQL).

- SQS/SNS: SQS for reliable message queuing (decouples producers/consumers); DLQs handle poison messages. SNS, on the flip side, is all about fanning out messages—think of it as your event megaphone, blasting notifications to every subscriber you can imagine, whether that’s SQS, Lambda, or even some webhook out in the wild.

- EventBridge: Modern event bus for event-driven architectures, supports SaaS integrations, event filtering, and replay.

- API Gateway: Front door for APIs, offering REST, HTTP, and WebSocket APIs. Integrates with Lambda, ALB, or private VPC backends. REST APIs add usage plans with API keys, response caching, and AWS WAF integration; HTTP APIs are cheaper and lower-latency but have fewer features. Throttling is available across API types.

- VPC & Endpoints: VPC endpoints (Gateway for S3/DynamoDB, Interface for most others via PrivateLink) keep traffic private. And don’t forget to lock things down: security groups and network ACLs keep the bad actors out, VPC Flow Logs record which traffic flowed where, and CloudTrail tells you who did what.

- Step Functions: Serverless workflow orchestrator—chains Lambda/ECS/Glue/SageMaker. What I love is that Step Functions handles the messy parts—automatic retries, error handling, moving data along, and even gives you a nice visual flow so you can see what’s happening.

- ElastiCache/DAX: Caching layers (Redis/Memcached, DAX for DynamoDB) to reduce latency and offload backend.

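- Kinesis/MQ: Kinesis for streaming ingestion and real-time analytics with per-shard ordering; Amazon MQ (managed ActiveMQ or RabbitMQ) when you need standard messaging protocols, typically when migrating an existing broker.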

Exam Watch: “SAA-C03 expects you to recognize which services natively scale, which need explicit configuration, and how each contributes to loose coupling and HA.”

AWS Architectural Patterns for Scalability & Loose Coupling

True story: I once inherited a “microservices” app where every service called every other synchronously, no retries, no fallback. One downstream hiccup? System-wide lockup. That’s a distributed monolith. Let’s walk through real scalable patterns:

- Microservices: Each domain function is a discrete service (ECS/EKS, Lambda). Let your microservices talk using REST APIs (via API Gateway), toss around events with EventBridge, or hand off work through SQS queues—you’ve got options. And seriously—don’t stash important state in RAM or on a local disk. Honestly, just hand off the heavy lifting to the managed databases. They’re designed for this, and you’ll save yourself a lot of late-night stress.

- Event-Driven: Services emit events (EventBridge, SNS, DynamoDB Streams). The cool part? Whoever’s interested in those events can pick them up and do their thing whenever they’re ready, with no waiting in line. Want to scale? Just add more consumers; there’s no need to touch the code that emits the events.

- Queue-Based Decoupling: Decouple producers/consumers with SQS. Don’t forget—dead-letter queues are like the seatbelts for your messages, batch processing lets you move more stuff in one go, and if you need things in a certain order, FIFO is your friend (just keep an eye on those throughput numbers—it’s easy to hit the cap).

- Orchestrated Workflows: Chain Lambda or ECS tasks with Step Functions. No need to hand-code retries or special branches for errors—Step Functions has all that covered, and you can even pass data straight through the chain.

- API-Based Integration: Expose APIs via API Gateway/ALB. And please—don’t forget your safeguards: put limits on how often people can hit your APIs, switch on caching for frequently called REST endpoints, and use robust authentication (Cognito, custom Lambda authorizers, or straight-up IAM for internal services).
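API Gateway documents its request throttling in terms of a token bucket: a steady-state rate plus a burst capacity. Here’s a simplified, single-process model of that idea, not API Gateway’s actual implementation; the TokenBucket class, the rate of 2 requests/second, and the burst of 3 are assumptions for illustration. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Simplified token bucket: refills `rate` tokens/second, holds at most `burst`."""

    def __init__(self, rate: float, burst: int, now: float = 0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # Start full so an initial burst is allowed
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at the burst size
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # API Gateway would answer 429 Too Many Requests here


bucket = TokenBucket(rate=2.0, burst=3)
# Five requests at t=0: the first three spend the burst, the rest are throttled
burst_results = [bucket.allow(0.0) for _ in range(5)]
print(burst_results)  # [True, True, True, False, False]
# One second later, two tokens have refilled
later_results = [bucket.allow(1.0) for _ in range(3)]
print(later_results)  # [True, True, False]
```

The burst absorbs short spikes while the rate bounds sustained load, which is why both numbers matter when you configure stage or method throttling.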

Pro Tip: “Regardless of pattern, always design for retries, dead-letter queues, and robust monitoring. Without them, silent failures can snowball into outages.”
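Retries come first on that list, and naive immediate retries can pile onto a struggling dependency. A common remedy, described on the AWS Architecture Blog as exponential backoff with “full jitter”, is sketched below. TransientError, call_with_retries, and the default delays are hypothetical; the AWS SDKs already retry many throttling errors for you, so treat this as a pattern for your own downstream calls. The sleep and random functions are injectable so the behavior can be checked without waiting.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (throttles, timeouts)."""


def call_with_retries(fn, max_attempts=5, base=0.1, cap=5.0,
                      sleep=time.sleep, rng=random.random):
    """Call fn(); on TransientError, wait with capped exponential backoff + full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the error (e.g. so SQS retries or DLQs it)
            # Full jitter: uniform delay in [0, min(cap, base * 2**attempt))
            sleep(rng() * min(cap, base * 2 ** attempt))


# Usage: a flaky dependency that fails twice, then succeeds
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("throttled")
    return "ok"


delays = []
result = call_with_retries(flaky, sleep=delays.append, rng=lambda: 0.5)
print(result, calls["n"], len(delays))  # ok 3 2
```

Jitter spreads retries from many clients over time, so they don’t all hammer the dependency in the same instant after an outage.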

Let’s check out a simple high-level AWS setup with some real decoupling muscle:

```
+-----------+     +-------------+     +----------+     +--------------+
|  API GW   | --> |   SQS/SNS   | --> |  Lambda  | --> | DynamoDB/RDS |
+-----------+     +-------------+     +----------+     +--------------+
      |                  |                  |                  |
    Client          Message Bus        Processing         Data Store
```

Figure 1: Scalable, decoupled AWS architecture pattern

Advanced Event-Driven Pattern Example

```
[Event Source] --> [EventBridge] --+--> [Lambda Consumer 1]
                                   |
                                   +--> [Lambda Consumer 2]
                                   |
                                   +--> [SQS DLQ for failed events]
```

Figure 2: EventBridge-based event routing with DLQ
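Under the hood, each EventBridge rule decides independently whether an event matches its pattern, which is what lets you add consumers without touching the producer. The sketch below is a deliberately simplified matcher: it handles only the basic form where each pattern field lists allowed values and nested objects match recursively. Real EventBridge also supports prefix, numeric, anything-but, exists, and other operators, plus array-valued event fields, none of which this covers. Rule names and event fields are made up.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Return True if every field in `pattern` is satisfied by `event`.

    Simplified semantics: a list in the pattern means "the event value must equal
    one of these"; a dict means "recurse into the nested object". Event fields not
    named in the pattern are ignored.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical rules, each routing to a different consumer
rules = {
    "consumer-1": {"source": ["shop.orders"], "detail-type": ["OrderPlaced"]},
    "consumer-2": {"source": ["shop.orders"], "detail": {"priority": ["high"]}},
}

event = {
    "source": "shop.orders",
    "detail-type": "OrderPlaced",
    "detail": {"orderId": "o-1", "priority": "high"},
}

targets = [name for name, pattern in rules.items() if matches(pattern, event)]
print(targets)  # ['consumer-1', 'consumer-2']
```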

Implementing Decoupling: Compute, Storage, and Data Tiers

A common pitfall: storing session state on EC2 disk, then wondering why scaling breaks user experience. Statelessness is essential! Here’s how to decouple tiers:

- Compute: Use ASG (for EC2/ECS) or Lambda. Avoid storing state locally; use external stores for session/data.

- Storage: S3 for object storage (enable lifecycle and replication). EFS for POSIX file needs (rare in stateless apps). When you’ve got structured data to save, DynamoDB’s your best bet for NoSQL, but if you want classic SQL, just roll with RDS or Aurora and let AWS sweat the details—you don’t need the drama.

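- Data: DynamoDB Streams for change data capture and event-driven processing, Aurora Global Database for cross-region, S3 cross-region replication for DR. Remember: multi-region support varies by service and engine. Aurora Global Database covers Aurora MySQL and Aurora PostgreSQL, while standard RDS engines rely on cross-region read replicas (where the engine supports them) or cross-region snapshot copies.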

Let’s walk through a quick example: an order processing pipeline that’s actually decoupled using SQS and SNS.

- User submits order via web frontend → API Gateway.

- API Gateway pushes message onto SQS (SQS triggers Lambda).

- Lambda validates/processes, writes to DynamoDB, and publishes to SNS for notifications.

- And the best part? If any single part needs to grow, shrink, or has a hiccup—it doesn’t ruin the rest. DLQs are there to snag any troublemakers that fall through the cracks.

Okay, let’s see how this fits together in CloudFormation. The template wires up the SQS queue, Lambda function, DynamoDB table, SNS topic, and (don’t forget) a DLQ, so you can actually get some sleep at night. The API Gateway front door is left out to keep it short.

```yaml
Resources:
  OrderQueue:
    Type: AWS::SQS::Queue                  # SQS queue for incoming orders
    Properties:
      QueueName: "OrderQueue"
      VisibilityTimeout: 180               # At least 6x the Lambda timeout (AWS guidance)
      RedrivePolicy:
        deadLetterTargetArn: !GetAtt OrderDLQ.Arn
        maxReceiveCount: 5                 # After 5 failed receives, move to the DLQ

  OrderDLQ:
    Type: AWS::SQS::Queue                  # Dead-letter queue for orders that keep failing
    Properties:
      QueueName: "OrderDLQ"
      MessageRetentionPeriod: 1209600      # 14 days (the maximum) to allow investigation

  OrderTable:
    Type: AWS::DynamoDB::Table             # DynamoDB table to store order data
    Properties:
      TableName: "Orders"
      AttributeDefinitions:
        - AttributeName: orderId
          AttributeType: S
      KeySchema:
        - AttributeName: orderId
          KeyType: HASH
      BillingMode: PAY_PER_REQUEST         # Pay per request, no capacity planning nightmares

  OrderSNSTopic:
    Type: AWS::SNS::Topic                  # SNS topic for order notifications
    Properties:
      TopicName: "OrderNotifications"

  LambdaExecutionRole:
    Type: AWS::IAM::Role                   # IAM role the Lambda function assumes
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        # Lets the function write its logs to CloudWatch Logs
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
      Policies:
        - PolicyName: "OrderLambdaPolicy"
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow                # Read/write orders, scoped to this table only
                Action: [dynamodb:PutItem, dynamodb:GetItem]
                Resource: !GetAtt OrderTable.Arn
              - Effect: Allow                # Consume messages from this queue only
                Action: [sqs:ReceiveMessage, sqs:DeleteMessage, sqs:GetQueueAttributes]
                Resource: !GetAtt OrderQueue.Arn
              - Effect: Allow                # Publish to this topic only
                Action: [sns:Publish]
                Resource: !Ref OrderSNSTopic

  OrderLambda:
    Type: AWS::Lambda::Function
    Properties:
      Handler: index.handler
      Runtime: python3.11
      Timeout: 30                          # Keep well under the queue's visibility timeout
      Role: !GetAtt LambdaExecutionRole.Arn
      Code:
        S3Bucket: my-lambda-functions      # Bucket holding the deployment package
        S3Key: order-processor.zip
      Environment:
        Variables:
          TABLE_NAME: !Ref OrderTable
          SNS_TOPIC_ARN: !Ref OrderSNSTopic
      ReservedConcurrentExecutions: 10     # Caps concurrency to protect downstream systems

  LambdaSQSTrigger:
    Type: AWS::Lambda::EventSourceMapping
    Properties:
      BatchSize: 5
      EventSourceArn: !GetAtt OrderQueue.Arn
      FunctionName: !GetAtt OrderLambda.Arn
      Enabled: true
```

CloudFormation: SQS → Lambda → DynamoDB/SNS pipeline with execution role, DLQ, and environment variables

Sample Lambda Handler: Idempotency & Notification

```python
import json
import os
from decimal import Decimal

import boto3

# Clients are created once per execution environment and reused on warm invocations
dynamodb = boto3.resource("dynamodb")
table = dynamodb.Table(os.environ["TABLE_NAME"])  # Orders table, named via env variable
sns = boto3.client("sns")                          # SNS client for notifications
SNS_TOPIC_ARN = os.environ["SNS_TOPIC_ARN"]        # Topic ARN from the environment


def handler(event, context):
    """Lambda entry point: processes a batch of SQS messages."""
    for record in event["Records"]:
        # The DynamoDB resource API rejects Python floats, so parse them as Decimal
        order = json.loads(record["body"], parse_float=Decimal)
        # A missing orderId raises KeyError: the batch is retried, then DLQ'd
        order_id = order["orderId"]

        # Idempotency: only write if this order isn't already in the table
        try:
            table.put_item(
                Item=order,
                ConditionExpression="attribute_not_exists(orderId)",
            )
        except dynamodb.meta.client.exceptions.ConditionalCheckFailedException:
            print(f"Order {order_id} already processed, skipping")
            continue

        # Caveat: if publish fails after the write succeeds, the retry skips this
        # order and the notification is lost; an outbox table or a DynamoDB
        # Streams trigger closes that gap.
        sns.publish(TopicArn=SNS_TOPIC_ARN, Message=record["body"])
    # No return value needed: for a plain SQS trigger, success deletes the batch,
    # and any exception makes the whole batch visible again for retry.
```

Python Lambda: Idempotent writes and event publishing, batch-aware for SQS triggers.

Key Implementation Notes

- Lambda’s event source mapping polls the queue on your behalf and invokes the function with batches; your code never polls SQS itself. Batch size affects throughput and how many messages are retried when one fails.

- The DLQ is attached to the SQS queue through its redrive policy. A message that has been received five times (maxReceiveCount) without being deleted is moved to OrderDLQ for analysis.

- SNS_TOPIC_ARN must be set as an environment variable for notification to work.

- Use ReservedConcurrentExecutions to cap Lambda concurrency; use Provisioned Concurrency if you need to eliminate cold start latency.
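One refinement worth knowing: with a plain SQS trigger, a single bad message fails the whole batch, so messages that already succeeded get retried too. If you enable ReportBatchItemFailures on the event source mapping (FunctionResponseTypes in CloudFormation), the function can instead return only the failed message IDs in a batchItemFailures list. Below is a sketch of that response shape; process_order is a hypothetical stand-in for your business logic, and the sample event is hand-built with only the fields the handler reads.

```python
import json


def process_order(order: dict) -> None:
    """Hypothetical business logic: reject orders without a positive quantity."""
    if order.get("quantity", 0) <= 0:
        raise ValueError(f"bad quantity in {order.get('orderId')}")


def handler(event, context=None):
    """SQS handler that reports partial batch failures instead of failing the batch."""
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Only this message returns to the queue (and eventually the DLQ)
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


# Hand-built event containing just the fields the handler reads
sample_event = {
    "Records": [
        {"messageId": "m-1", "body": json.dumps({"orderId": "o-1", "quantity": 2})},
        {"messageId": "m-2", "body": json.dumps({"orderId": "o-2", "quantity": 0})},
    ]
}
print(handler(sample_event))  # {'batchItemFailures': [{'itemIdentifier': 'm-2'}]}
```

An empty batchItemFailures list tells Lambda the whole batch succeeded, so the good messages are deleted and only m-2 is retried.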

Designing for High Availability and Disaster Recovery

A startup learned the hard way when all their DBs lived in one AZ—an outage wiped them out for hours. Avoid this with proper DR and HA strategies:

- Multi-AZ Deployments: RDS and Aurora support automatic Multi-AZ failover, and an ASG spread across several AZs launches replacement capacity when one AZ fails. Always enable Multi-AZ for stateful services.

- Multi-Region Deployments: Use Route 53 DNS failover, S3 cross-region replication (must be configured), Aurora Global DB (MySQL/Postgres), and DynamoDB Global Tables for HA. Test your failover! Use AWS Fault Injection Service (formerly Fault Injection Simulator) for chaos engineering.

- Backups & Snapshots: Automate RDS, S3, and DynamoDB backups. Store copies in a separate region if possible. Use S3 lifecycle policies to manage cost and retention.

- DR Patterns:

  - Pilot Light: Core infra pre-provisioned, scaled up on disaster. Cost-effective, slower RTO.

  - Warm Standby: Active, scaled-down copy always running; faster switch, higher cost.

  - Active-Active: Both regions fully active; lowest RTO, highest cost.

| Pattern | RTO | RPO | Cost | Example |
|---|---|---|---|---|
| Pilot Light | Tens of minutes | Tens of minutes | Low | S3 CRR, small DB in standby |
| Warm Standby | Minutes | Minutes | Medium | Scaled-down app/DB in 2nd region |
| Active-Active | Seconds | Near-zero | High | DynamoDB Global Tables, Aurora Global DB |

Exam Watch: “Know which services support Multi-AZ and Multi-Region natively. For example: RDS (Multi-AZ), Aurora Global DB (Multi-Region, MySQL/Postgres only), S3 (CRR, must configure), DynamoDB (Global Tables).”

Security and Compliance Best Practices

- IAM Roles & Policies: Use least privilege. Prefer roles (not users) with short-lived credentials. Use Service Control Policies (SCPs) at the Org level for guardrails. Detect risky permissions with Access Analyzer.

- Cross-Account Access: Use resource-based policies (e.g., S3, Lambda), roles, and external IDs. Don’t share IAM users between accounts.

- Encryption Everywhere: S3 encrypts new objects with SSE-S3 by default; use SSE-KMS with customer managed keys where you need key policies and per-key audit trails. Enforce TLS for all endpoints, and audit keys and their usage regularly.

- VPC Endpoints & PrivateLink: Use Gateway Endpoints (S3/DynamoDB) and Interface Endpoints (other AWS services, SaaS) to keep traffic private and reduce attack surface.

- Secrets Management: Store secrets in AWS Secrets Manager or SSM Parameter Store; never in code or environment variables.

- Compliance & Auditing: Use CloudTrail (API auditing), AWS Config (resource compliance), and Artifact for compliance reports.

Pro Tip: “Automate IAM, VPC, and encryption setup via CloudFormation or Terraform. Regularly review with Access Analyzer and Config rules to detect drift or risk.”

Sample: VPC Endpoint for S3 (Gateway)

```yaml
Resources:
  S3VPCEndpoint:
    Type: AWS::EC2::VPCEndpoint
    Properties:
      ServiceName: !Sub "com.amazonaws.${AWS::Region}.s3"
      VpcId: vpc-123abc              # Replace with your VPC ID
      RouteTableIds:
        - rtb-456def                 # Route tables whose S3 traffic should stay private
      VpcEndpointType: Gateway
```

CloudFormation: VPC Gateway Endpoint for S3

Monitoring, Logging, Troubleshooting, and Cost Optimization

- CloudWatch: Collects logs, metrics, and alarms. Create dashboards for EC2, Lambda, RDS, SQS, etc. Set alarms on queue depth, Lambda errors, or DB throttling. Use Anomaly Detection for unusual patterns.

- X-Ray: Distributed tracing. Enable tracing in Lambda and API Gateway to visualize call flows and detect bottlenecks.

- Log Aggregation & Correlation: Use structured logging (JSON, correlation IDs), aggregate with CloudWatch Logs Insights or OpenSearch for cross-service diagnostics.

- Alerting: Set up alarms for high error rates, slow responses, and budget thresholds (via AWS Budgets), and track service quota usage with Service Quotas CloudWatch alarms or Trusted Advisor’s service limit checks.

- Cost Control: Right-size instances with Compute Optimizer, leverage Savings Plans/Reserved Instances for steady workloads, and use Spot with On-Demand fallback (via ASG mixed instances). Use AWS Cost Explorer for analysis and tagging for allocation.

- Service Quotas: Proactively check and request increases for Lambda concurrency, SQS throughput, DynamoDB WCU/RCU, and other limits in production.
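Structured logging with correlation IDs is easy to start with Python’s standard library. This sketch emits one JSON object per line so CloudWatch Logs Insights can filter on fields; the field names (correlation_id, service) and the logger name are our own conventions, not anything AWS requires.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Values passed via `extra=` become attributes on the record
            "correlation_id": getattr(record, "correlation_id", None),
            "service": getattr(record, "service", None),
        }
        return json.dumps(payload)


logger = logging.getLogger("orders")
stream_handler = logging.StreamHandler()
stream_handler.setFormatter(JsonFormatter())
logger.addHandler(stream_handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # Avoid duplicate lines via the root logger (e.g. in Lambda)

# Reuse the caller's correlation ID if one arrived with the request; else mint one
correlation_id = str(uuid.uuid4())
logger.info("order accepted",
            extra={"correlation_id": correlation_id, "service": "order-api"})
```

The payoff comes when every service logs the same ID it received: a single Logs Insights query filtering on correlation_id then reconstructs one request’s path across services.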

Sample: CloudWatch Alarm for SQS Queue Depth

```yaml
Resources:
  SQSQueueDepthAlarm:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: "SQS queue depth exceeds threshold"
      Namespace: "AWS/SQS"
      MetricName: "ApproximateNumberOfMessagesVisible"
      Dimensions:
        - Name: QueueName
          Value: !GetAtt OrderQueue.QueueName   # Assumes OrderQueue is in the same template
      Statistic: "Average"
      Period: 60                 # Seconds per evaluation period
      EvaluationPeriods: 2       # Must breach for two consecutive periods
      Threshold: 100
      ComparisonOperator: "GreaterThanThreshold"
      AlarmActions:
        - arn:aws:sns:REGION:ACCOUNT_ID:AlarmTopic   # Placeholder: your alerting topic ARN
```

CloudFormation: CloudWatch alarm for SQS queue depth

Priya’s Principle: “If you can’t see it, you can’t fix it. Logging costs money, but downtime or lost data costs more. Invest in monitoring and alerting up front.”

Containerized Microservices on AWS

Containers are a powerful way to build scalable, loosely coupled systems. AWS supports this via ECS (native, Fargate/EC2) and EKS (Kubernetes).

- ECS: Easiest path if you’re new to containers. Use Fargate for serverless compute, EC2 for custom AMIs. Integrates with ALB/NLB, Service Discovery, SQS, and EventBridge for advanced patterns.

- EKS: Managed Kubernetes (AWS runs the control plane). Use for advanced orchestration, rolling or blue/green deployments (Kubernetes Deployments plus Service switching, or tools such as Argo Rollouts), and hybrid/multi-cloud.

- Scaling: ECS/EKS services scale tasks/pods based on CPU, memory, custom metrics, or SQS queue depth (with KEDA/ECS Service Auto Scaling).

- HA: Deploy tasks/pods across multiple AZs, use ALB/NLB for resilient ingress.

Hands-On Lab: ECS Service with ALB, SQS Decoupling

Deploy an ECS cluster (Fargate), create an ALB, and configure an ECS service with an SQS-backed queue for decoupled processing. Auto scale tasks based on queue depth metric.

- Create ECS Fargate cluster and service.

- Configure ALB listener to target ECS service.

- Create SQS queue, grant ECS task role SQS permissions.

- Configure a CloudWatch alarm to scale ECS tasks based on ApproximateNumberOfMessagesVisible.
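Scaling on raw queue depth alone ignores how fast each task works. The AWS Auto Scaling documentation instead suggests a “backlog per instance” target: divide your acceptable latency by the average time to process one message to get the backlog one worker can carry, then size the fleet against the visible messages. The arithmetic is sketched below for ECS tasks; the function names, capacity bounds, and the illustrative numbers are assumptions, and latency/processing times are your own measurements (integers in milliseconds here to keep the math exact).

```python
import math


def acceptable_backlog_per_task(max_latency_ms: int, avg_processing_ms: int) -> float:
    """Messages one task can have queued and still meet the latency target."""
    return max_latency_ms / avg_processing_ms


def desired_task_count(visible_messages: int, max_latency_ms: int,
                       avg_processing_ms: int, min_tasks: int = 1,
                       max_tasks: int = 50) -> int:
    """Task count that keeps backlog per task at or below the acceptable value."""
    target = acceptable_backlog_per_task(max_latency_ms, avg_processing_ms)
    needed = math.ceil(visible_messages / target)
    # Clamp to the service's capacity bounds (hypothetical defaults)
    return max(min_tasks, min(max_tasks, needed))


# Illustrative numbers: 1,500 visible messages spread over 10 running tasks,
# a 10 s latency target, and 100 ms of work per message
backlog_per_task = 1500 / 10                       # 150 messages per task today
target = acceptable_backlog_per_task(10_000, 100)  # 100 messages per task allowed
print(backlog_per_task, target, desired_task_count(1500, 10_000, 100))  # 150.0 100.0 15
```

In practice you would publish backlog per task as a custom CloudWatch metric and let a target tracking policy hold it at the acceptable value, rather than computing desired counts yourself.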

Advanced Event-Driven Architectures

- Kinesis Data Streams: Use for high-throughput, real-time event ingestion (IoT, analytics) with ordering preserved per shard. Lambda or custom consumers process events. Amazon Data Firehose (formerly Kinesis Data Firehose) delivers to S3, Redshift, or OpenSearch.

- EventBridge: Central event bus that connects AWS services and SaaS. Use the schema registry to discover event schemas and generate code bindings, and archive and replay for troubleshooting.

- Step Functions: Orchestrate multi-step, multi-service workflows. Built-in error handling, retry logic, and state passing.

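Hands-On: Configure an EventBridge rule to trigger Lambda and send events that fail delivery to an SQS DLQ (function errors after delivery are handled by Lambda’s own asynchronous retries and destinations). Archive the bus’s events so you can replay them for recovery or testing, and reprocess whatever lands in the DLQ separately.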

Caching and Data Acceleration

- ElastiCache (Redis/Memcached): Offload frequent reads from the database and cut latency for session stores, leaderboards, and cached API responses.

- DAX for DynamoDB: In-memory cache for DynamoDB with microsecond read latency; it is API-compatible with DynamoDB, so existing code needs minimal changes.

- API Gateway Caching: Enable response caching on a REST API stage to reduce backend load, with per-method overrides for settings such as TTL.
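All three options put a fast copy of data in front of a slower source. On the application side, the most common pattern with ElastiCache is cache-aside (lazy loading): read the cache, fall back to the database on a miss, then populate the cache with a TTL. The sketch below uses a tiny in-process TTL cache as a stand-in for Redis so it runs anywhere; TTLCache, load_from_db, and get_product are hypothetical names, and with a real cluster you’d use your Redis client’s get and set-with-expiry instead.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a Redis/Memcached cache with per-key TTLs."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # Expired entries behave like a miss
            return None
        return value

    def set(self, key, value, ttl_s):
        self._data[key] = (value, self._clock() + ttl_s)


db_reads = []


def load_from_db(product_id):
    """Hypothetical slow database read."""
    db_reads.append(product_id)
    return {"id": product_id, "price": 42}


def get_product(cache, product_id, ttl_s=60):
    """Cache-aside: try the cache, fall back to the DB, then populate the cache."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(key, value, ttl_s)
    return value


now = [0.0]
cache = TTLCache(clock=lambda: now[0])
get_product(cache, "p1")   # Miss: reads the DB
get_product(cache, "p1")   # Hit: served from the cache
now[0] = 61.0              # Advance past the 60 s TTL
get_product(cache, "p1")   # Expired: reads the DB again
print(db_reads)            # ['p1', 'p1']
```

The TTL is the main design lever: shorter values keep data fresher at the cost of more database reads.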

Zero Trust Security Patterns

- Network Segmentation: Isolate workloads with VPC, subnets, and security groups. Use NACLs for stateless filtering.

- Private API Gateway Endpoints: Expose APIs only within VPCs using interface endpoints.

- Data Perimeters: For organizations, combine SCPs, resource-based policies, and VPC endpoint policies to restrict which identities, resources, and networks can reach your data from each VPC/account.

Cost Management and Billing Alarms

- AWS Budgets: Set cost and usage budgets—trigger alerts via SNS.

- Cost Explorer: Analyze spend trends, detect anomalies, and optimize reserved instance/Savings Plan usage.

- Tagging Strategy: Tag resources for allocation, compliance, and chargeback reporting.

Sample: AWS Budgets Alarm

```shell
aws budgets create-budget \
  --account-id 123456789012 \
  --budget file://budget.json \
  --notifications-with-subscribers file://notifications.json
```

CLI: Create a cost budget with alert
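The budget command reads its budget definition from budget.json, and the alert itself lives in a separate notifications file passed with --notifications-with-subscribers. One way to produce both is a short Python script. The field names follow the AWS Budgets API’s Budget and NotificationWithSubscribers structures; the budget name, $100 limit, 80% threshold, and email address are placeholders. Use SubscriptionType "SNS" with a topic ARN instead of an email if you want the alert to reach an SNS topic.

```python
import json


def build_budget_files(limit_usd="100", threshold_pct=80.0, email="alerts@example.com"):
    """Return (budget, notifications) shaped like the Budgets API's
    Budget and NotificationWithSubscribers structures."""
    budget = {
        "BudgetName": "monthly-cost-budget",         # Placeholder name
        "BudgetLimit": {"Amount": limit_usd, "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
    }
    notifications = [
        {
            "Notification": {
                "NotificationType": "ACTUAL",        # FORECASTED alerts earlier
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": threshold_pct,          # Percent of the budget limit
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
        }
    ]
    return budget, notifications


def main():
    budget, notifications = build_budget_files()
    with open("budget.json", "w") as f:
        json.dump(budget, f, indent=2)
    with open("notifications.json", "w") as f:
        json.dump(notifications, f, indent=2)


if __name__ == "__main__":
    main()
```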

Troubleshooting, Anti-Patterns, and Diagnostic Procedures

- Anti-Pattern 1: Tight Coupling: Synchronous service calls, no retries. Remedy: Use SQS/Events, async patterns.

- Anti-Pattern 2: Single Point of Failure: One EC2/AZ. Remedy: Multi-AZ ASG/Lambda, DR failover.

- Scaling Gaps: Auto Scaling only on CPU, queue backlog grows. Remedy: Scale on multiple metrics (CPU, SQS queue depth).

- DLQ Usage: Missing DLQs can result in lost/blocked messages. Always configure DLQs for SQS, and for Lambda asynchronous invocations if needed.

Troubleshooting Checklist for Distributed AWS Apps

- Check CloudWatch metrics for spikes, errors, or timeouts.

- Review X-Ray traces for latency sources and bottlenecks.

- Correlate logs using request/correlation IDs across services.

- Check SQS DLQ for failed messages; analyze, replay if needed.

- Verify IAM policies for recent changes or overbroad permissions.

- Inspect service quotas and raise as needed before scaling events.

Diagnostic Flowchart: Lambda Processing Failure

```
+---------------------+
| Lambda fails event  |
+----------+----------+
           |
   [Check CloudWatch]
           |
+----------+----------+
| Error logs present? |
+----------+----------+
      |          |
     Yes         No
      |          |
[Analyze      [Enable detailed
 stacktrace]   logging]
      |
[Check DLQ/SQS redrive]
      |
[Replay/reprocess]
```

Performance Optimization and Scaling Tips

- Auto Scaling Policies: Blend CPU, network, and queue depth. Use step/target tracking policies. Test with load generators.

- Lambda Tuning: Use provisioned concurrency for cold start-sensitive functions, reserved concurrency to protect critical workloads. Throttle to avoid DB overrun.

- DynamoDB Partitioning: Choose high-cardinality partition keys, and use write sharding (random or calculated suffixes) for keys that stay hot. In provisioned mode, enable auto scaling for RCU/WCU. Monitor with CloudWatch metrics.

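- SQS Tuning: Batch size (up to 10 messages per ReceiveMessage call; a Lambda event source mapping on a standard queue can batch up to 10,000 with a batching window), visibility timeout (AWS recommends at least six times the function timeout when Lambda is the consumer), and a DLQ for poison messages.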

- API Gateway Throttling: Set per-method quotas/throttles. Use caching for read-heavy endpoints.

- Service Limit Awareness: Check AWS Service Quotas before production launches (Lambda concurrency, SQS TPS, DynamoDB throughput).
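Write sharding is the usual fix when one logical key (a single hot date or product, say) takes too much traffic. The DynamoDB developer guide describes two suffix styles, both sketched below: a random suffix spreads writes evenly but forces reads to fan out across every shard, while a calculated suffix (hashed from another attribute) lets a reader compute exactly where one item lives. The shard count of 10, the "#" separator, and the function names are assumptions for illustration.

```python
import hashlib
import random

SHARDS = 10  # Assumed shard count; size it to your peak write rate


def random_shard_key(base_key: str, rng=random) -> str:
    """Random suffix: even write spread, but reads must query every shard."""
    return f"{base_key}#{rng.randrange(SHARDS)}"


def calculated_shard_key(base_key: str, item_id: str) -> str:
    """Calculated suffix: deterministic, so a reader who knows item_id can find it."""
    digest = hashlib.sha256(item_id.encode()).digest()
    return f"{base_key}#{int.from_bytes(digest[:4], 'big') % SHARDS}"


def all_shard_keys(base_key: str) -> list[str]:
    """Partition keys to query (one Query each) to read the whole logical key."""
    return [f"{base_key}#{i}" for i in range(SHARDS)]


print(calculated_shard_key("2024-11-29", "order-123"))
print(len(all_shard_keys("2024-11-29")))  # 10
```

The trade-off is read cost: more shards absorb more writes, but a full read of the logical key now takes SHARDS queries.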

Scaling and Throughput Comparison Table

| Service | Scaling Mechanism | Throughput Limit | Notes |
|---|---|---|---|
| Lambda | Auto, account limit | 1,000 concurrent executions per Region (default quota) | Request an increase for large workloads |
| SQS Standard | Auto | Nearly unlimited API calls per second | Up to 10 messages per batch call |
| SQS FIFO | Auto | 300 API calls/s per action; 3,000 messages/s with batching (high-throughput mode raises this) | Strict ordering within a message group |
| DynamoDB | Auto/on-demand | Provisioned RCU/WCU or on-demand; each partition tops out at 3,000 RCU / 1,000 WCU | Partition key design critical |

Pro Tip: “Monitor your scaling events. Spiky scaling equals spiky bills. Use cooldowns to smooth scaling and avoid thrash.”

Real-World Industry Scenarios: Case Studies

E-commerce Order Pipeline: During holiday spikes, a retailer’s monolith choked on order surges. Rebuilt with API Gateway → SQS → Lambda → DynamoDB, plus SNS for notifications. Result: Orders processed reliably at 10x prior peak, with zero downtime. DR via S3 CRR and DynamoDB Global Tables.

IoT Data Ingestion: A fleet of devices sent telemetry to API Gateway. EventBridge routed events to Lambda for real-time processing and S3 for archival. SQS and DLQ handled processing spikes and failures. Result: Scalable to millions of events/hour, with rapid recovery from regional issues.

Global SaaS Platform: The SaaS app was redeployed using Route 53 latency routing, DynamoDB Global Tables for user state, and S3 CRR for file backup. Global Accelerator reduced latency. Routine DR drills using AWS Fault Injection Service ensured business continuity.

Exam Preparation: SAA-C03 Focus Areas and Study Tips

Common SAA-C03 Exam Gotchas

- Look for loose coupling in scenario answers: queues, async events, stateless compute.

- Understand which services natively scale (Lambda, S3, DynamoDB) and which require configuration (EC2, RDS).

- Recognize HA patterns: Multi-AZ (RDS, ASG), Multi-Region (Aurora Global DB, DynamoDB Global Tables), failover with Route 53.

- When to use DLQs, retries, and idempotency—especially for Lambda/SQS integrations.

- Identify least privilege IAM, encryption defaults, and VPC endpoint use cases.

- Distinguish between REST, HTTP, and WebSocket APIs in API Gateway, including when to use caching, throttling, and WAF.

- Be aware of service quotas and scaling limits in high-load scenarios.

- Spot common anti-patterns: tight coupling, open S3 buckets, overprivileged IAM, no monitoring.

Sample Scenario and Analysis

Scenario: A social media startup expects viral growth and needs to ingest unpredictable bursts of user uploads. They want zero downtime, automated scaling, and the ability to add downstream analytics without impacting upload speed.

- A. API Gateway → EC2 → RDS

- B. API Gateway → S3 (for files) + SQS → Lambda → DynamoDB → SNS

- C. Direct upload to EC2 server, which writes to S3

Best Answer: B – Loosely coupled (SQS decouples upload from processing), scalable (Lambda/DynamoDB/S3), enables downstream analytics via SNS, and no single point of failure.

Study Checklist

- Deploy and break/fix an SQS/Lambda/DynamoDB pipeline. Practice recovery.

- Automate with CloudFormation or Terraform—memorize by doing.

- Draw architecture diagrams for exam scenarios.

- Read the AWS Well-Architected Framework—focus on operational excellence, reliability, and security pillars.

- Review AWS documentation on scaling, DR, and security best practices.

- Join study groups—teaching reinforces knowledge.

Quick Reference:

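- Multi-AZ: RDS (opt-in Multi-AZ), Aurora (storage always spans three AZs; add replicas for fast failover), and, by default within a region, DynamoDB, Lambda, and S3 (except One Zone storage classes)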

- Multi-Region: Aurora Global DB (MySQL/Postgres), DynamoDB Global Tables, S3 (with CRR)

- DLQ: Always for SQS, Lambda async

- Idempotency: Use conditional writes in DynamoDB

- Encryption: S3/KMS, RDS/Aurora, DynamoDB, EBS default encryption

Wrapping Up: The Real Key to AWS Architecture Mastery

Great AWS architectures are less about which service you pick and more about strategy and habits: design for loose coupling, automate scaling and recovery, build for failure, and monitor everything. These aren’t just best practices for the exam—they’re your keys to sleeping soundly in production.

If you’re prepping for the SAA-C03, focus on recognizing and rationalizing these patterns in scenario questions. AWS wants you to architect like a cloud native, not just stack services. And if you’re building for production, always test your assumptions—failover, scaling, and DR aren’t real until you’ve proven them.

Got stories of your own, or want to share a tricky design challenge? I love learning from other practitioners—send your questions, war stories, or feedback. Let’s keep raising the bar for cloud architecture, together.

Stay well-architected, and happy building!